As society increasingly relies on artificial intelligence for a wide range of applications, it is essential to assess the environmental consequences of these technological advances. AI models, notably those used in machine learning and deep learning, require significant computational resources. This intensive processing translates into a substantial carbon footprint because of the energy consumed by data centers and the hardware they run. Some of the primary factors contributing to the carbon emissions associated with AI include:

- Data Center Operations: The enormous energy consumption of servers and cooling systems required to maintain optimal performance.

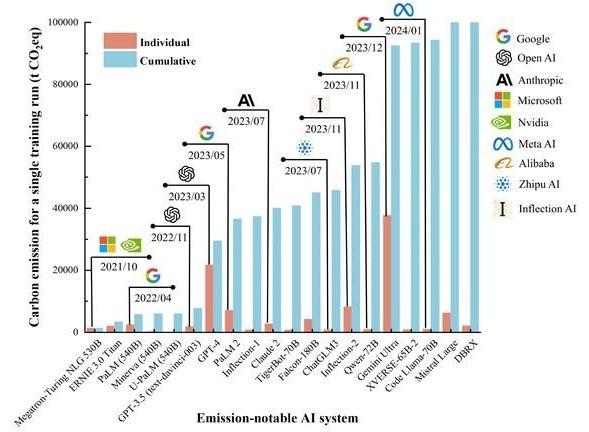

- Model Training Processes: Developing sophisticated AI models often involves training on extensive datasets, which can span days or even weeks and consume immense amounts of electricity.

- Resource Allocation: The demand for specialized hardware, such as GPUs, further compounds the environmental impact as these components are costly to produce and require significant energy during use.

Moreover, not all AI applications yield positive environmental outcomes. While certain models can optimize energy use in industries like transportation and manufacturing, the environmental costs associated with their development often overshadow these benefits. For example, training one Google AI model has been reported to result in an estimated 626,155 pounds (roughly 284 metric tons) of CO2 emissions, illustrating a direct connection between high-performance computing and climate change. As we advance into a more technology-driven future, it is crucial to prioritize energy efficiency and transition toward renewable energy sources in the AI sector to mitigate its environmental impact:

- Integrating green technologies in data centers to lower energy consumption.

- Promoting sustainable AI research practices, focusing on algorithms that require less computational power.

- Encouraging corporations to adopt carbon offsetting measures as part of their AI operations.

Evaluating Resource Consumption: The Hidden Costs of AI Development

The transformation brought about by AI technology has ushered in remarkable advancements, but its environmental consequences deserve equal attention. Training large-scale AI models requires an immense amount of computational power, which often results in heavy energy consumption. In fact, a single model can consume as much energy as several households would use in a year. As AI continues to evolve, the push for increasingly complex algorithms only exacerbates this issue, leading to a cycle of escalating resource demands. The carbon footprint associated with data centers, the hardware required for computation, and the cooling systems that keep servers at optimal temperatures presents a multifaceted sustainability challenge.
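The household comparison above can be made concrete with a back-of-the-envelope calculation. The following is a minimal sketch, not a measurement: the `TrainingRun` fields, the helper names, and every number in the example (accelerator count, power draw, duration, overhead factor, grid carbon intensity, household consumption) are hypothetical assumptions chosen only to show how the quantities combine.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """Hypothetical description of one training job (all values are assumptions)."""
    num_accelerators: int        # GPUs or similar accelerators in use
    avg_power_watts: float       # assumed average draw per accelerator
    hours: float                 # wall-clock training time
    overhead_factor: float       # facility overhead (cooling etc.), >= 1.0
    grid_kg_co2_per_kwh: float   # assumed carbon intensity of the electricity


def energy_kwh(run: TrainingRun) -> float:
    """Total facility energy: accelerator energy scaled by facility overhead."""
    it_kwh = run.num_accelerators * run.avg_power_watts * run.hours / 1000.0
    return it_kwh * run.overhead_factor


def emissions_kg(run: TrainingRun) -> float:
    """CO2 emissions implied by the energy use and the grid's carbon intensity."""
    return energy_kwh(run) * run.grid_kg_co2_per_kwh


def household_years(run: TrainingRun, household_kwh_per_year: float) -> float:
    """Express the run's energy as years of consumption for one household."""
    return energy_kwh(run) / household_kwh_per_year


# Illustrative inputs only: 512 accelerators at 400 W for two weeks.
example = TrainingRun(
    num_accelerators=512,
    avg_power_watts=400.0,
    hours=336.0,
    overhead_factor=1.2,
    grid_kg_co2_per_kwh=0.4,
)

print(f"Energy:     {energy_kwh(example):,.0f} kWh")
print(f"Emissions:  {emissions_kg(example):,.0f} kg CO2")
print(f"Households: {household_years(example, 10_000.0):.1f} household-years")
```

Because the estimate is a simple product, each factor offers a separate lever: fewer or more efficient accelerators, shorter training, lower facility overhead, or cleaner electricity all reduce the result proportionally.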

Furthermore, the materials used in AI hardware, such as rare earth metals, pose additional environmental concerns. Extracting these materials often leads to habitat destruction, water pollution, and increased greenhouse gas emissions. Once devices reach the end of their life cycle, e-waste management remains a critical issue, as many components are not easily recyclable. The reliance on fossil fuels to power AI infrastructure further complicates efforts to reduce the technology's ecological footprint. Stakeholders in the tech industry must therefore come together to evaluate not only the efficiency and capabilities of AI but also the hidden costs involved in its operation and development.

Mitigating Environmental Impact: Strategies for Sustainable AI Practices

As the deployment of artificial intelligence continues to grow, so does the urgency to address its environmental repercussions. The energy consumed during the training of large AI models is substantial, and the combined electricity demand of the data centers behind them has been compared to that of entire countries. To combat these impacts, organizations are exploring various strategies aimed at reducing their carbon footprint. Adopting energy-efficient hardware is vital; newer chips and specialized processors can significantly decrease power consumption while improving performance. Moreover, integrating renewable energy sources into data centers reduces reliance on fossil fuels, so that AI training runs on cleaner energy.

Data centers can also implement advanced cooling technologies that reduce the energy needed for temperature control, which is one of the primary energy drains in AI operations. Additionally, organizations are considering the optimization of algorithms to enhance efficiency, enabling models to require less computational power without sacrificing accuracy. In tandem with these practices, promoting a circular economy approach in technology usage ensures that hardware is reused and recycled, minimizing waste. By fostering these sustainable practices, the AI industry can contribute to a healthier planet while continuing to innovate and progress.
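The effect of better cooling can be illustrated with power usage effectiveness (PUE), a common data center metric defined as total facility energy divided by the energy used by the IT equipment itself. The sketch below assumes hypothetical energy figures and a hypothetical helper named `pue`; it shows only how a reduction in cooling energy moves the ratio.

```python
def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float = 0.0) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy.

    A value of 1.0 would mean every kilowatt-hour goes to computation;
    higher values mean more energy is spent on cooling and other overhead.
    """
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return (it_kwh + cooling_kwh + other_overhead_kwh) / it_kwh


# Hypothetical figures for the same IT load before and after a cooling upgrade.
baseline = pue(it_kwh=1000.0, cooling_kwh=500.0, other_overhead_kwh=100.0)
upgraded = pue(it_kwh=1000.0, cooling_kwh=200.0, other_overhead_kwh=100.0)

print(f"Baseline PUE: {baseline:.2f}")
print(f"Upgraded PUE: {upgraded:.2f}")
print(f"Facility energy saved per 1000 kWh of compute: {(baseline - upgraded) * 1000.0:.0f} kWh")
```

PUE captures only facility overhead. It says nothing about how efficiently the algorithms use the IT energy, which is why cooling improvements and algorithmic optimization are complementary.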

The Road Ahead: Policies and Innovations for Eco-Friendly Artificial Intelligence

Advances in energy-efficient algorithms and machine learning techniques can significantly reduce the environmental impact of AI applications. Research is underway to develop models that require less computational power, which translates directly into reduced energy usage. The adoption of edge computing further supports this goal by processing data locally rather than transmitting it to centralized servers, thereby cutting down on energy consumption. Encouraging a culture of environmental responsibility among AI developers through educational initiatives and incentives can also accelerate the shift toward solutions that are not only intelligent but also environmentally conscious. By embracing these strategies, the tech industry can lead the charge in crafting an eco-friendly future for artificial intelligence.